Unlock faster reporting and accurate sales data with automated ETL

Understanding ETL: Extract, Transform, Load

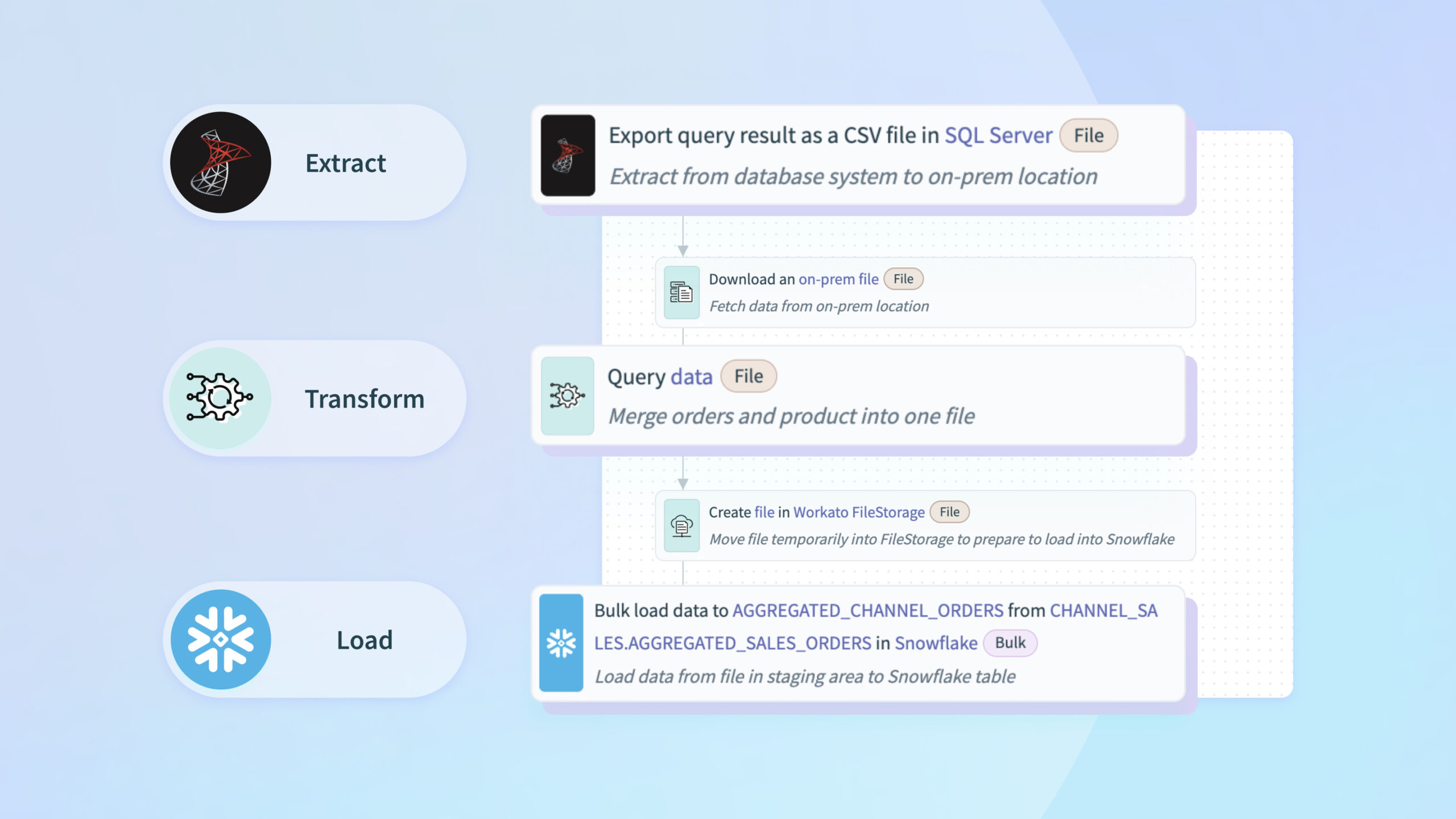

ETL stands for Extract, Transform, Load—a common data integration process that organizations use to gather data from various sources, transform it into a usable format, and load it into a data warehouse or data lake for analysis and reporting. The key steps in this process include:

- Extract: Data is gathered from different sources such as databases, APIs, SaaS applications, and files.

- Transform: Once extracted, the data is cleansed, enriched, and transformed. This can include tasks like data filtering, joining, aggregation, and applying business rules to make the data consistent.

- Load: The transformed data is loaded into a centralized destination, such as a cloud data warehouse like Snowflake, where it can be analyzed for business insights.

This process allows organizations to centralize, clean, and structure their data efficiently, which is crucial for accurate reporting and decision-making.

Automating ETL for E-Commerce Sales Data

This article focuses on automating the sales data management process for e-commerce businesses using MSSQL as the database. The automation fetches daily order data, standardizes it, and loads it into a buffer (e.g., Snowflake) for further processing and analysis. By automating this ETL process, you can ensure that your sales data is always accurate, up-to-date, and ready for reporting.

The automated workflow extracts sales orders from the MSSQL e-commerce database, enriches the data by merging it with product information, and loads the structured data into Snowflake for further analysis.

Recipe Walkthrough: Automating the ETL Process for Sales Data

To better understand how this automation optimizes sales data management, we’ll break down the ETL process into three key stages: Extract, Transform, and Load, while highlighting the tools used at each step.

Extract: Gathering Data from Source Systems

The Extract stage is where raw data is pulled from various systems and made ready for transformation. In this automation, sales data is extracted from an on-premises MSSQL database.

-

Trigger: Scheduler by Workato

The ETL process begins with a Scheduler, which triggers every 30 minutes. This ensures that data is extracted on a regular basis, maintaining up-to-date sales data without manual intervention.

Purpose: Automates the timing of data extraction, keeping data synchronized consistently. -

Generate File URL in On-Prem Files

A file URL is generated within your on-prem system to store the data temporarily. This establishes a secure location where the raw sales data can reside before transformation.

Purpose: Prepares a destination for holding extracted sales data, ensuring easy access during transformation. -

Export CSV from MSSQL

The recipe extracts daily orders from the MSSQL e-commerce database, exporting them as a CSV file. This typically includes orders placed, completed transactions, and other relevant sales data.

Purpose: Extracts relevant sales data in a structured format for further processing. -

Download File from On-Prem Files

The extracted data is downloaded from the on-prem system, making it accessible for transformation.

Purpose: Downloads the extracted data, making it available for transformation.

Transform: Enriching Data

Once the data is extracted, the Transform phase enriches and prepares it for loading into the target system. This is where raw data is cleansed, organized, and aligned with your business needs.

-

Query Data in SQL Transformations by Workato

Using SQL Transformation, the recipe enriches the extracted data by joining it with additional information, such as product details from another database. SQL provides flexibility to merge, aggregate, and filter data, ensuring the final dataset is tailored to your reporting needs.

Example: Combining sales orders with product information to get a full view of product performance during a specific period.

Purpose: Cleanses and enriches the raw data to provide a complete view of sales and inventory data for reporting. -

Store File in Workato FileStorage

After the data is transformed, it is temporarily stored in Workato FileStorage. This is a secure location where the transformed data is held before it is moved into Snowflake.

Purpose: Safely stores the transformed data temporarily, ensuring data integrity during the process.

Load: Moving Data into the Target System

The final stage of ETL is Load, where the transformed data is uploaded to a destination (in this case, Snowflake) for analysis. The data is prepared to be used for reporting and other business purposes.

-

Replicate Table Schema in Snowflake

The schema of the transformed data is replicated in Snowflake. This ensures that the structure of the data, such as column names and data types, aligns with the target schema in Snowflake.

Purpose: Prepares Snowflake to receive the transformed data by matching the schema. -

Upload File to Internal Stage in Snowflake

The data is uploaded to an internal staging area within Snowflake. Staging is a common practice in data pipelines, where data is held temporarily before being fully loaded into the final destination table.

Purpose: Transfers data into Snowflake’s staging area, preparing it for the final load. -

Bulk Load Data to AGGREGATED_CHANNEL_ORDERS

The transformed data is bulk-loaded into the

AGGREGATED_CHANNEL_ORDERS</strong> table within Snowflake. Bulk loading ensures that large volumes of data are inserted quickly and efficiently.

Purpose:Completes the ETL process by loading the data into the final destination for analysis and reporting.

Error Handling with Logger by Workato

The automation recipe includes an error-handling mechanism using Logger by Workato. If an error occurs during any part of the process, the Logger captures and records the issue, allowing for quick resolution without interrupting the entire data pipeline.

Purpose: Ensures that any errors in the ETL process are logged and addressed efficiently, maintaining the reliability of the automation.

Why Automating ETL Matters

Each phase in this ETL process—Extract, Transform, and Load—is designed to streamline the flow of data, reduce manual tasks, and ensure your sales data is always up-to-date. By automating this workflow:

- SQL Transformations enrich and format your data to ensure it meets the specific needs of your analysis.

- FileStorage provides secure and temporary storage for transformed data, ensuring data integrity.

- Bulk Loading into Snowflake ensures that large datasets are transferred efficiently, enabling timely reporting and insights.

With this automated ETL pipeline, organizations can significantly enhance data accuracy, save time, and gain real-time insights into their operations.

Real-World Use Cases for ETL

Here are a few examples of how automating the ETL process can benefit different business areas:

- Sales Data Management In fast-paced sales environments, having up-to-date data is crucial for making decisions. Automating the consolidation and transformation of sales data ensures accurate and timely insights.

- Inventory Management For companies managing large inventories, automating the synchronization of stock data across systems helps prevent stockouts or overstocking.

- Financial Reporting Automating the ETL process for financial data reduces the complexity and time needed to generate accurate reports, ensuring compliance and improved decision-making.

Why Workato for ETL?

Workato isn’t just an ETL tool—it’s a comprehensive data orchestration platform designed to manage the entire flow of data from ingestion to transformation, and ultimately, activation (reverse ETL), making it ideal for enterprises dealing with large, complex data environments. Its platform offers scalability, flexibility, and reliability across every stage of the data process.

Key Benefits of Workato’s Data Orchestration Platform

- Orchestrating Data Flows at Scale

Workato seamlessly integrates data from a wide range of sources—cloud, on-prem, files, APIs, and data warehouses—using over 1,200 pre-built connectors. It consolidates data flows into a unified system, enabling businesses to automate multi-stage pipelines while handling large-scale data ingestion and dynamic schema changes. - Automated Ingestion and Flexible Transformations

Workato automates the extraction and loading of data into cloud data warehouses or lakes, significantly reducing operational overhead. It supports push-down transformations within platforms like Snowflake, while offering SQL, Python, JavaScript, and Ruby for more complex transformations, enabling users to customize data handling to fit their needs. - Activating Data Across the Enterprise

Workato goes beyond traditional ETL with reverse ETL capabilities, pushing insights from data warehouses back into operational systems such as CRMs or ERPs. This allows teams to automate workflows and make real-time decisions using actionable data, making data orchestration a driver for immediate business outcomes. - Data Privacy, Compliance, and Security

With features like data masking, role-based access control, and audit logging, Workato ensures that data pipelines remain secure and compliant with regulatory requirements. Real-time monitoring and enhanced observability provide transparency and control over data, ensuring operational reliability across the enterprise.

Start Building with Workato

Ready to automate your ETL process? Get started quickly by duplicating the ready-to-use ETL automation recipe template from Workato’s Community Library. You can easily customize it to fit your needs.

For those eager to explore more, check out our additional resources:

- Visit docs.workato.com for comprehensive documentation

- Workato’s Data Orchestration platform

- Data Orchestration docs