Product Hour

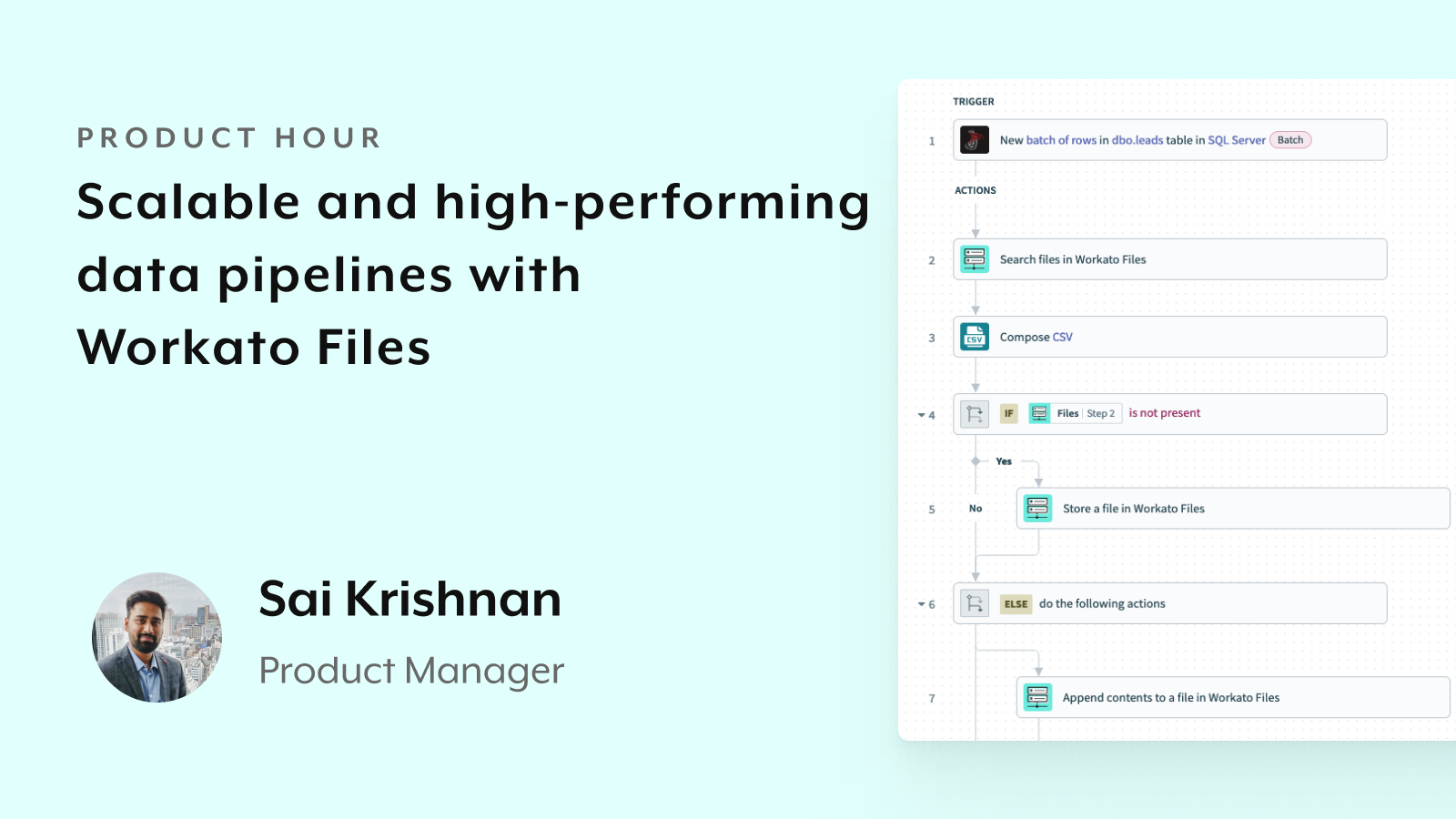

Scalable and high-performing data pipelines with Workato Files


Published: Sep 2022

Why should you watch?

When moving large volumes of data from one application to another, or building a data pipeline for data lakes or warehouses, scalability and higher throughput are essential.

However, API rate limits, batch size restrictions and other application constraints often create challenges for recipe builders. These limitations complicate both recipe design, which must be optimized for faster data processing, and operations, which must manage dependencies between multiple processes.

Join this Product Hour session to learn about the newly released Workato Files service and how it can simplify recipe design and operations while increasing performance for large-volume data transfers.

What will you learn?

- Introduction to Workato Files

- How to use the Workato Files connector

- Working with large files using streaming

- Other common use cases

- Sneak peek into the roadmap