October 2020 – Product Updates

As the world looks ahead to the future of work, automation takes center stage in driving efficiency, speed, and faster business outcomes. Our October product updates will help you create event-driven automations with the Kafka and Confluent Cloud connectors, uplift your RevOps with Outreach automations, and gain more control over orchestration.

- Multi-select menu in Slack

- Direct link to recipe steps

- Skip steps within recipes

- API path parameters

- OPA Smart Shunt for Databases

- Async/await for callable recipes

- Outreach connector

- Confluent/Kafka connector

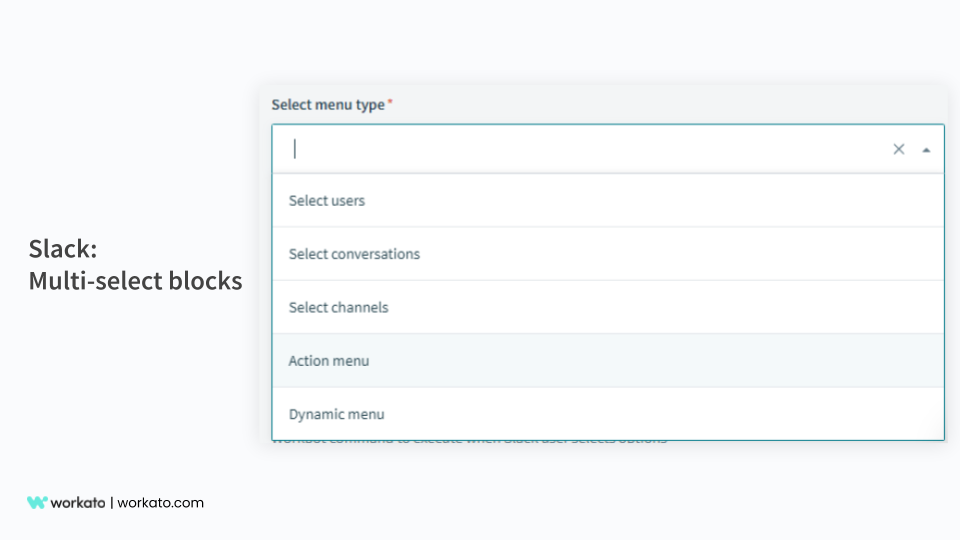

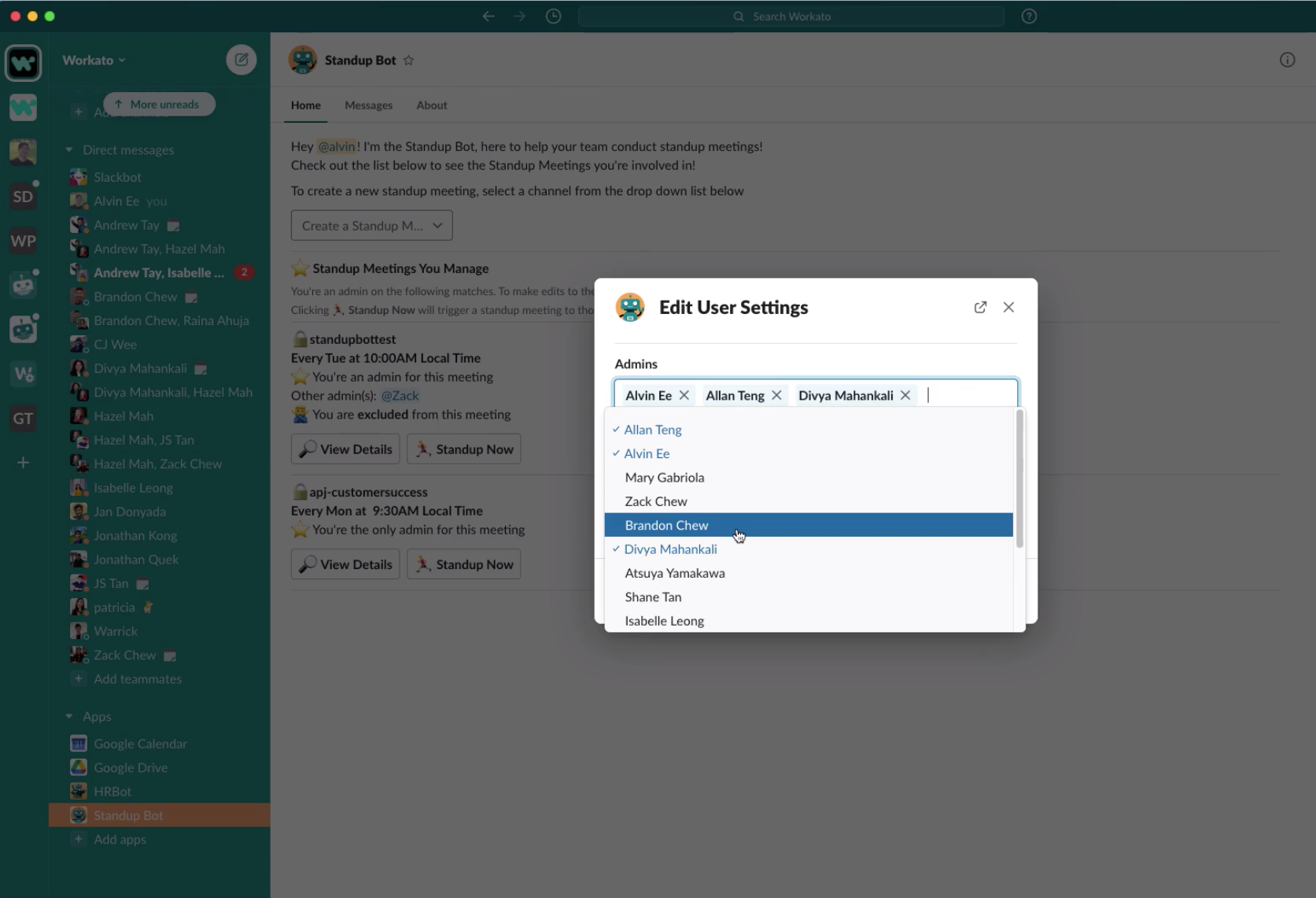

Do more with multi-select menus in Slack

Slack Multi-select block

It is common to use the select menu element when creating an interactive app for Slack using Workbot. The regular select menu is effective when a user needs to choose a specific option like updating a status, assigning an owner, or selecting a category.

Selecting multiple users

The new multi-select menu lets the user select multiple items from a list of options, such as selecting multiple tickets to update, responding to a multiple-answer survey question, or adding multiple team members to a standup meeting.
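To make the idea concrete, here is a minimal sketch of the kind of Slack Block Kit payload that backs a multi-select menu. It assumes Slack's `multi_static_select` element. The helper name `multi_select_block`, the `action_id`, and the ticket values are hypothetical and used only for illustration. How Workbot builds this block internally is not described here, so treat this as an illustration of the Slack side only.

```python
import json


def multi_select_block(prompt, action_id, choices, max_selected=None):
    """Build a Block Kit section with a multi_static_select accessory.

    `choices` is a list of (label, value) pairs. The helper name and
    arguments are illustrative, not part of any Workbot API.
    """
    element = {
        "type": "multi_static_select",
        "action_id": action_id,
        "placeholder": {"type": "plain_text", "text": "Select one or more"},
        "options": [
            {"text": {"type": "plain_text", "text": label}, "value": value}
            for label, value in choices
        ],
    }
    # Optional Slack field that caps how many options a user may pick.
    if max_selected is not None:
        element["max_selected_items"] = max_selected
    return {
        "type": "section",
        "text": {"type": "mrkdwn", "text": prompt},
        "accessory": element,
    }


block = multi_select_block(
    "Which tickets should be updated?",
    "tickets_to_update",  # hypothetical action_id
    [("TICKET-101", "101"), ("TICKET-102", "102"), ("TICKET-103", "103")],
)
print(json.dumps(block, indent=2))
```

Unlike the regular select menu, which reports a single selected option, an interaction with this element reports a list of selected options, so downstream steps should expect to loop over the selections.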

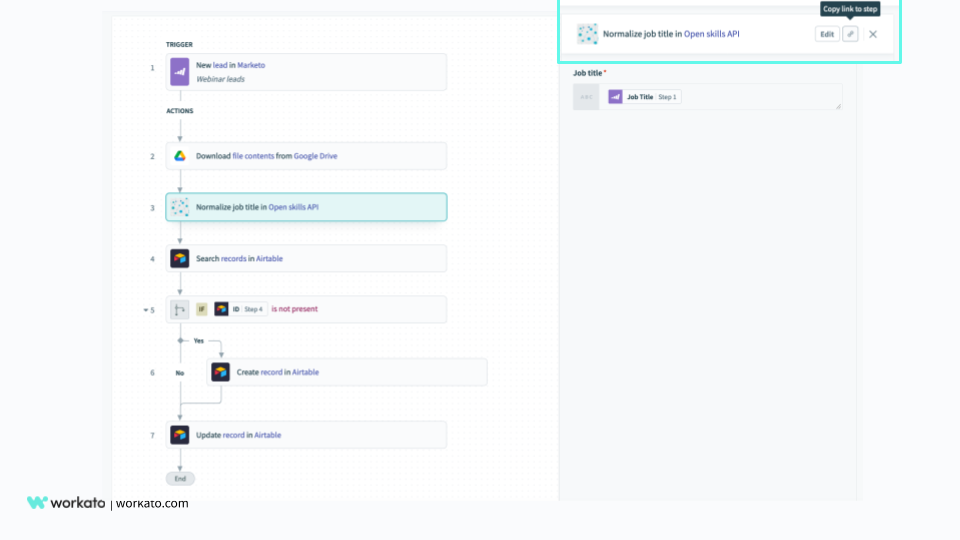

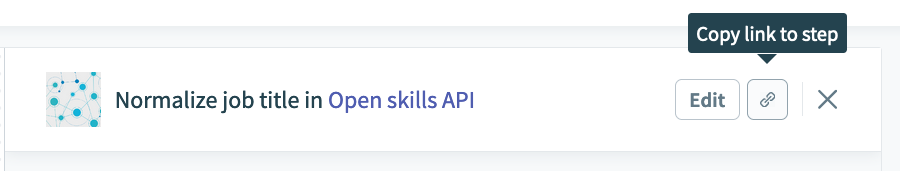

Sharing recipe steps just got easier

Copy link to Step

When multiple members of your team are working together on automation projects, there is often a need to share. Sometimes you may want to share a specific section or step in the recipe for your team to reference or review.

For example, you can share a recipe step that uses a special JavaScript action to cleanse email and address data for leads, or ask for help fixing misbehaving transformation logic.

In all these situations, you can easily get the URL to the specific recipe step using the “Copy link to step” option. Then you can proceed to share the copied URL with your team members.

When they click the URL you shared, it takes them directly to the exact recipe step you intended them to review. This eliminates the hassle of sharing the recipe URL and expecting the recipient to find the step you want them to look at.

Faster testing and troubleshooting with Skip Steps

Have you ever wanted to run only specific steps in a recipe to test the logic or debug? Or disable certain steps to troubleshoot a runtime error? Or choose a set of steps to execute when re-running a job?

Now, you can do it all with the new skip steps feature.

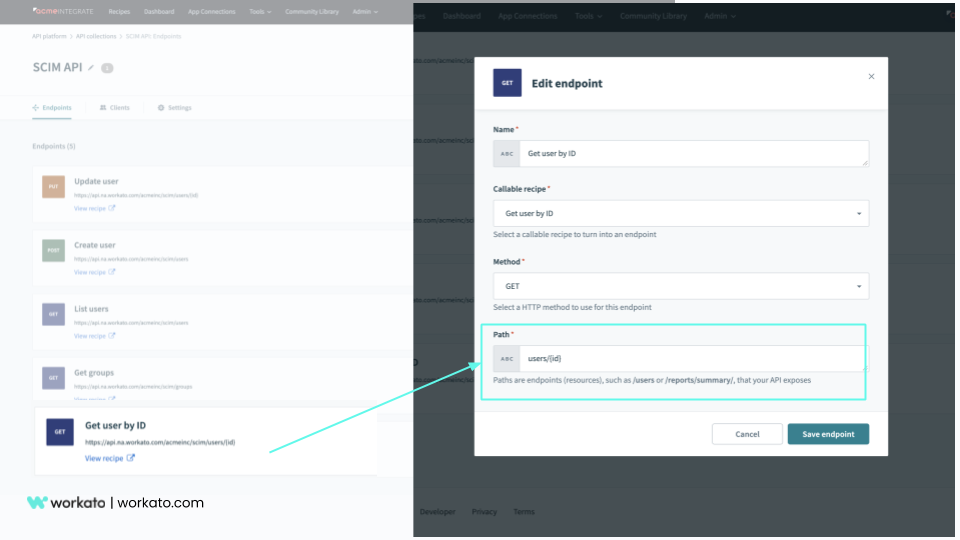

Simpler access to resources with API Path parameters

API Path Parameters

A path parameter is a parameter that lives within the endpoint URI. In a typical REST scheme, the path portion of the URL represents the entity class hierarchy.

Path parameters offer a more descriptive and distinct style, and they are more readable and easier to understand. They are the ideal choice when you want to identify a specific resource, because they remove the complexity of building a request body for something as simple as a resource lookup.

For example, here is an API endpoint used to retrieve details of a specific user:

Request:

GET /users/{id}

The {id} part represents the parameter that is required for the call.

Response:

    {
      "id": "510",
      "email": "pbrown@workato.com",
      "first_name": "Peter",
      "last_name": "Brown",
      "userName": "Peter Brown"
    }
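The mechanics of a path parameter can be sketched in a few lines of Python. This is a generic illustration of path templating, not the platform's implementation. The helper names `fill_path` and `match_path` are hypothetical. The first builds a concrete path from a template such as `/users/{id}`, and the second recovers the parameter values from an incoming path.

```python
import re
from urllib.parse import quote, unquote


def fill_path(template, **params):
    """Substitute {name} placeholders with URL-encoded values."""
    def replace(match):
        name = match.group(1)
        if name not in params:
            raise KeyError(f"missing path parameter: {name}")
        # safe="" also encodes "/" so a value cannot add path segments.
        return quote(str(params[name]), safe="")

    return re.sub(r"\{(\w+)\}", replace, template)


def match_path(template, path):
    """Return the path parameters if `path` fits `template`, else None."""
    template_parts = template.strip("/").split("/")
    path_parts = path.strip("/").split("/")
    if len(template_parts) != len(path_parts):
        return None
    params = {}
    for expected, actual in zip(template_parts, path_parts):
        if expected.startswith("{") and expected.endswith("}"):
            # A placeholder segment captures whatever the caller sent.
            params[expected[1:-1]] = unquote(actual)
        elif expected != actual:
            # A literal segment must match exactly.
            return None
    return params


print(fill_path("/users/{id}", id=510))
print(match_path("/users/{id}", "/users/510"))
```

Because the identifier travels in the path itself, the caller needs no request body, and the server can tell which resource is meant from the URL alone.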

Visit our documentation to get started with API path parameters.

Modern data pipelines using Cloud ETL/ELT

Modern Data Pipelines

Over the past year, our product team has invested significantly in robust capabilities for building reliable, high-performance data pipelines. Earlier this year, we announced updates for building data pipelines for Snowflake and Google BigQuery.

Continuing with this trend and the demand for modern data pipelines, we are launching a couple of powerful features that make data extracts from on-premise databases faster and the orchestration of pipelines simpler.

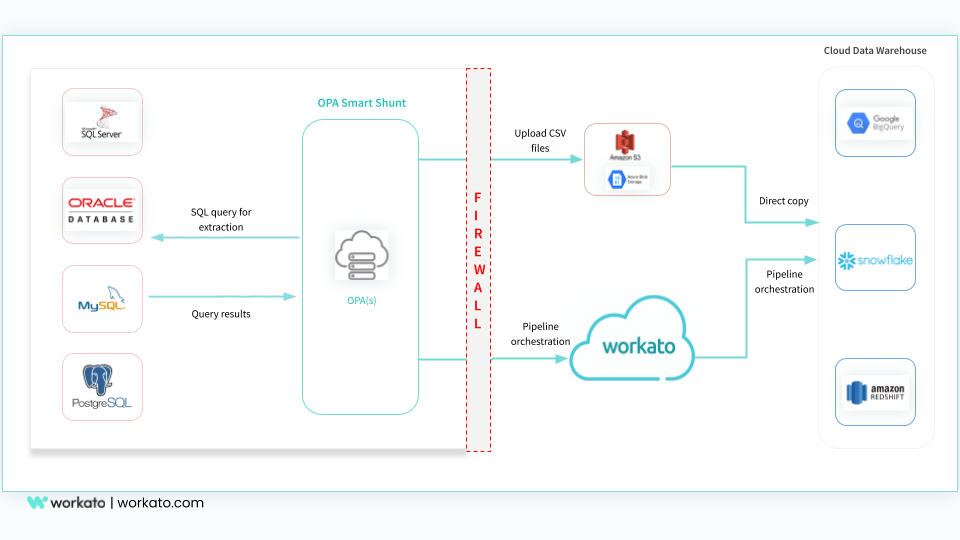

High performance data loads from on-premise databases

Smart Shunt for On-Prem Database extracts

OPA Smart Shunt, released earlier this year, has been used heavily for high-performance data transfers from on-premise databases. With the latest update, OPA Smart Shunt supports all major on-prem databases: Oracle, Microsoft SQL Server, MySQL, and PostgreSQL.

Additionally, you can continue to use JDBC connectivity to extract data from other sources, such as Hadoop.

The OPA Smart Shunt is particularly useful when you need to move high volumes of data, such as hundreds of gigabytes or terabytes, from an on-prem database and want higher throughput and faster loads. Common use cases include:

- Initial data load from on-prem databases to data lake or data warehouse

- Migrating from on-prem EDW to Cloud DW

- Large batch extracts from on-prem databases

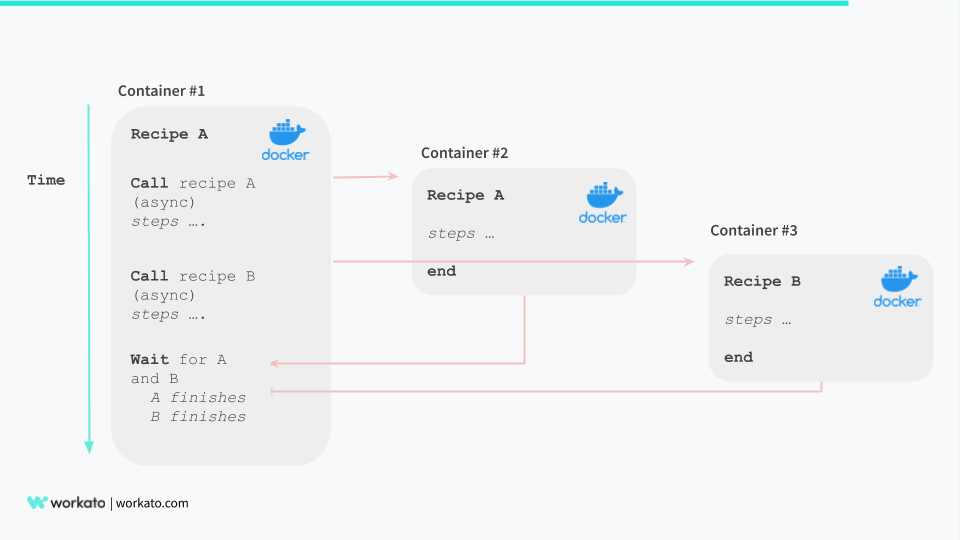

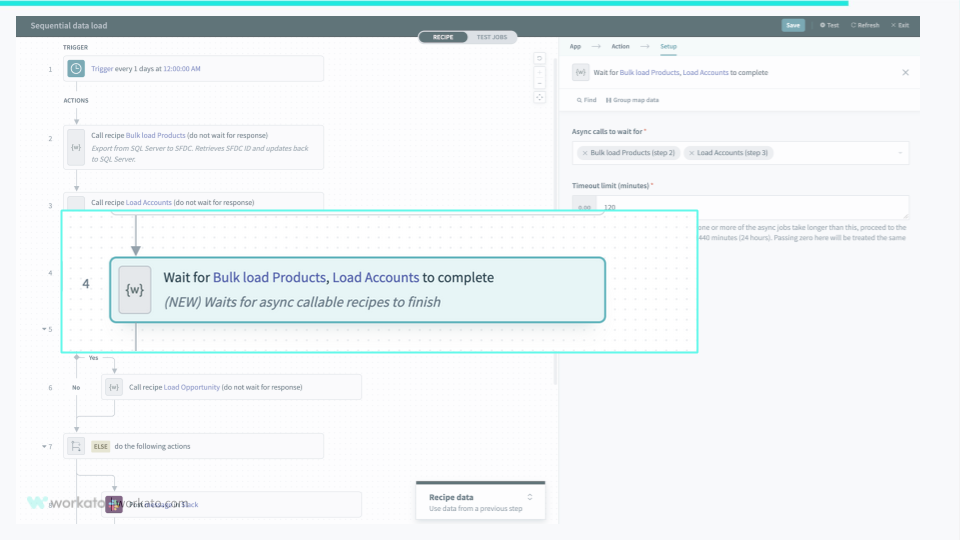

Reliable and efficient orchestration with Async/Await

Callable recipes – Async/Await

Teamwork is as essential for orchestration as it is for sports, business or any other activity. It involves sharing of responsibilities, coordination, and above all a sense of unity for working towards a common goal.

When running automations for business processes, data pipelines that load data warehouses, or other workloads, you often need to run a collection of automations. These automations must be organized in a way that reflects their relationships and dependencies.

Await callable recipe response

For example, when loading fact and dimension tables in your data warehouse, you want to orchestrate the load so that the dimension table loads run concurrently, but the fact table is loaded only after all dimension tables have loaded successfully.

This can be accomplished by setting up a main recipe that executes the dimension and fact table loads as callable recipes. With the new async/await feature for callable recipes, you can configure the fact table load to wait for the dimension loads to complete successfully.
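The same orchestration pattern can be sketched with Python's `asyncio`, used here purely as an analogy for how async/await behaves and not as recipe code. The function `load_table` stands in for a callable recipe, and the table names and delays are hypothetical.

```python
import asyncio


async def load_table(name, seconds, log):
    """Stand-in for a callable recipe that loads one table."""
    await asyncio.sleep(seconds)  # simulated load time
    log.append(name)
    return name


async def main_recipe():
    log = []
    # Start all dimension loads concurrently and wait for every one.
    # gather() re-raises the first failure, so the fact load below
    # never starts if a dimension load fails.
    await asyncio.gather(
        load_table("dim_customer", 0.02, log),
        load_table("dim_product", 0.01, log),
        load_table("dim_date", 0.03, log),
    )
    # Reached only after all dimension loads have succeeded.
    await load_table("fact_sales", 0.01, log)
    return log


order = asyncio.run(main_recipe())
print(order)
```

The dimension loads overlap in time, so this stage takes roughly as long as the slowest load rather than the sum of all three, while the fact load is still guaranteed to run last.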

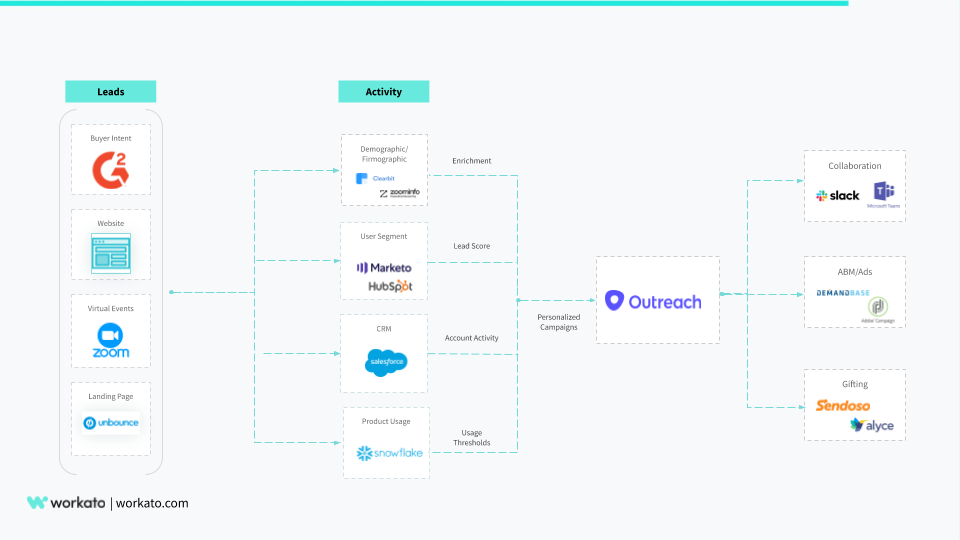

Maximize Outreach to drive growth and revenue uplift

Outreach Automations

Whether you’re in account-based, inbound, or outbound sales, Outreach is an important tool for you to connect to your leads and customers.

Now, with the new connector for Outreach, you can build automations that trigger email sequences in Outreach to:

- ensure sales reps immediately reach out to hot leads with real-time enrichment using Clearbit

- quickly connect with leads when lead scores exceed a threshold in Marketo

- automate and personalize campaigns using product usage data in Snowflake

- drive higher conversions by using buyer intent data from G2 to inform your Outreach sequences

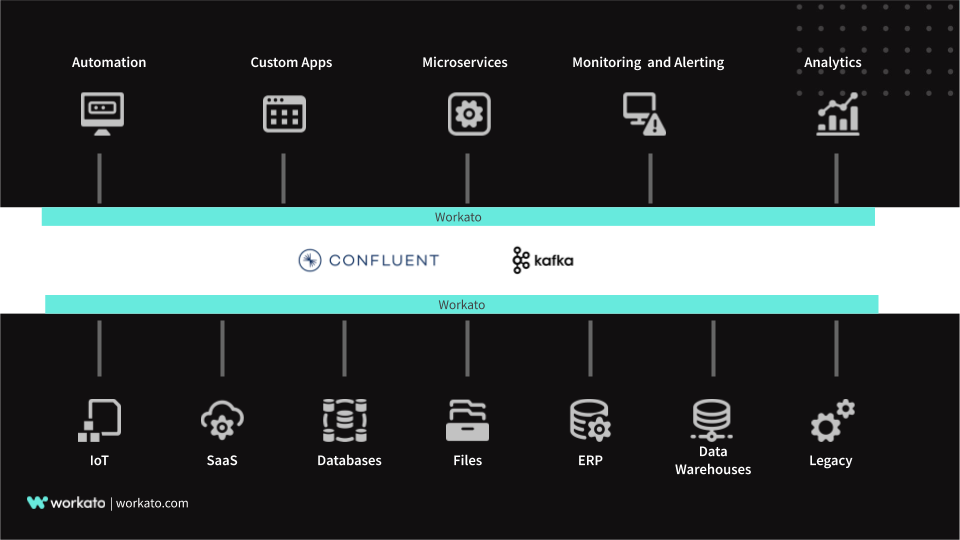

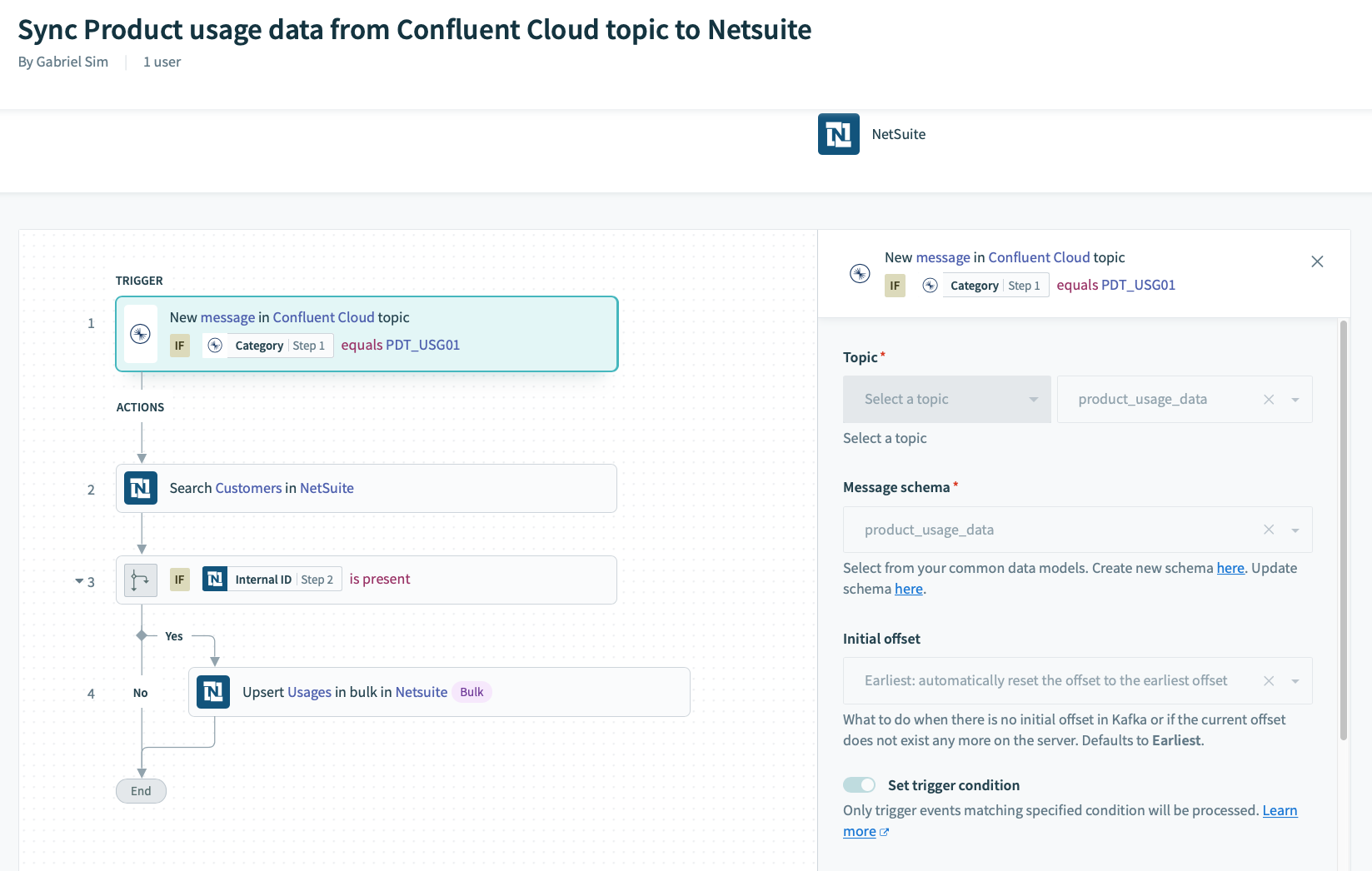

Powering automations with Event Driven Architecture

Kafka and Confluent Connector

Event-driven architecture (EDA) lets you scale services independently, develop with agility, and cut costs.

With the newly released Kafka and Confluent Cloud connectors, you can send messages to and receive messages from Kafka event streams. By integrating with 1000+ business apps, APIs, databases, or other services, you can reliably publish data into a Kafka event stream.

Automations with Confluent

Additionally, the connectors enable you to consume events from Kafka streams to create automations, microservices, and real-time data pipelines.

Stay up-to-date on product features

We hope you’ve enjoyed this edition of Product Updates! Please visit our changelog for all of the latest updates.